Achieving Domain-Specific PII Anonymisation Effectively with Generative AI

Project grade received: "A+"

Executive Summary

Developed as part of the IS469 Gen AI with LLMs module, this project addresses the challenge of sharing sensitive documents in domains such as healthcare, legal, and finance, which are often full of personally identifiable information (PII). Traditional rule-based redaction methods tend to be brittle and frequently over-remove content, harming the utility of the text.

This project explores a hybrid approach that combines:

- NER-based PII detection using encoder models and open-source libraries.

- Generative anonymisation with prompt engineering.

- Text segmentation strategies for long documents.

- Adversarial and utility-aware optimisation inspired by recent research (e.g., Robust Utility-Preserving Text Anonymization (RUPTA), “Language Models Are Advanced Anonymizers”).

Key Findings:

- NER Label Quality: For NER with encoder models, label quality dominates model capacity. Our trained BERT NER model reaches Micro-F1 ≈ 0.87, whereas the same architecture is capped around F1 ≈ 0.54 on weakly labelled clinical notes.

- LLM Limitations: LLM prompt engineering alone can reach strong anonymisation performance but struggles on long documents due to oversummarisation and context loss.

- Segmentation Impact: Semantic embedding-based segmentation significantly improves anonymisation quality on long medical texts, reducing segmentation error significantly and cutting PII leakage by almost half in an experiment.

- Adversarial Optimization: Adversarial evaluation and RUPTA-inspired optimisation further strengthen privacy–utility trade-offs, boosting anonymisation rates to ~97% while keeping downstream utility (BERT F1) only slightly reduced.

The final outcome is an end-to-end anonymisation pipeline that integrates NER, generative anonymisation, segmentation, adversarial feedback, and utility-guided optimisation into a single user-ready workflow.

1. Background and Motivation

1.1 Problem Context

Healthcare, legal, and financial organisations frequently need to share documents, such as discharge summaries, referral letters, legal memos, or transaction reports, that are rich in PII. These documents must be shared for tasks like analytics, research, audits, or inter-institutional collaboration, while still preserving privacy.

Traditional rule-based redaction systems typically:

- Depend on hand-crafted patterns (e.g., regexes).

- Are brittle to variation in language and formatting.

- Often remove more text than necessary, harming readability and downstream utility.

As regulations such as GDPR (EU), HIPAA (US), CCPA (US), and PDPA (Singapore) tighten expectations around anonymisation and consent, there is a strong need for solutions that protect privacy while preserving as much informational content and coherence as possible.

1.2 Redaction vs Anonymisation

A key conceptual distinction:

- Redaction: Sensitive spans are removed or masked (e.g., “Adam Tan” →

[NAME]orXxXx). This is safe but often breaks sentence flow and can make clinical or legal reasoning difficult. - Anonymisation: PII is replaced with realistic substitutes (e.g., “Adam Tan” → “The patient”), preserving natural language and coherence while preventing re-identification.

This project aims to move from blunt redaction toward utility-preserving anonymisation, while still satisfying privacy constraints.

1.3 Project Objectives and Overall Approach

The project is structured around five main components:

- Named Entity Recognition (NER): Establishing a strong, encoder-based baseline for PII detection.

- Generative Anonymisation: Applying larger LLMs with prompt engineering to replace PII with safe surrogates.

- Segmentation: Mitigating context loss and oversummarisation when anonymising long texts.

- Applying Research Papers: Adapting modern adversarial and utility-aware anonymisation frameworks (e.g., “Language Models Are Advanced Anonymizers”, RUPTA) to improve performance.

- Final System: A streamlined pipeline ready for end-user access, using appropriate software development libraries for model serving, frontend, and backend development.

2. NER-Based PII Detection Baseline

2.1 Datasets

We used two datasets from HuggingFace to train models for the NER and PII detection task.

1. OpenPII (ai4privacy/open-pii-masking-500k-ai4privacy)

- A large, well-annotated corpus with explicit span labels for PII entities such as names, emails, and locations.

- Designed specifically for training and evaluating NER-based PII models.

- Enables a standard multi-class BIO labelling scheme (B-TYPE, I-TYPE, O).

- Sampling: 10k / 2k / 2k examples for train / validation / test.

2. Redacted FHIR Clinical Notes (seun-ajayi/redacted_fhir_data)

- A dataset of healthcare clinical notes paired with redacted versions and associated FHIR metadata.

- Rich in domain-specific terminology and complex narrative structure.

- Challenge: It does not provide clean character-aligned span annotations; PII spans must be inferred (weakly supervised).

- Constraint: We used a Diff + “Xx” constraint, focusing only on differences where the redacted version contains placeholder patterns.

- Split: 2,158 train, 270 validation, 270 test notes.

2.2 Redaction via Named Entity Recognition

For NER, the objectives were:

- Fine-tune smaller encoder models (

bert-base-cased) for PII span detection. - Use OpenPII to build a clean, supervised NER benchmark.

- Treat FHIR as a weakly supervised resource where labels must be heuristically derived.

- Compare encoder-based BERT models with spaCy NER.

2.3 Encoder SFT

We focused on bert-base-cased to account for capitalisation context. Four main experiments were conducted:

| Experiment | Training Data | Test Data | Precision | Recall | Micro-F1 |

|---|---|---|---|---|---|

| FHIR (BERT) | FHIR | FHIR | 0.49 | 0.60 | 0.54 |

| OpenPII (BERT) | OpenPII | OpenPII | 0.86 | 0.89 | 0.87 |

| OpenPII → FHIR (Zero-Shot) | OpenPII | FHIR | 0.17 | 0.24 | 0.20 |

| OpenPII + FHIR | OpenPII, then FHIR | FHIR | 0.49 | 0.59 | 0.54 |

Key Observations:

- Weak vs Strong Labels: On FHIR, performance saturates around F1 ≈ 0.54 due to noisy labels. On OpenPII, the same architecture achieves F1 ≈ 0.87.

- Domain Mismatch: Zero-shot OpenPII → FHIR drops to F1 ≈ 0.20, showing that clinical narratives differ substantially from OpenPII data.

- Conclusion: BERT with OpenPII is chosen as the NER benchmark model for downstream tasks.

2.4 spaCy Experiments

spaCy models were evaluated under similar conditions.

- On OpenPII, spaCy reaches Micro-F1 ≈ 0.80 (strong but below BERT's 0.87).

- On FHIR, spaCy is similarly capped by heuristic labels (Micro-F1 ≈ 0.56).

- Generic off-the-shelf NER models performed poorly on both datasets.

3. Generative Anonymisation with LLMs

This section presents the use of Llama-3 instruct to perform generative anonymisation, replacing PII with realistic surrogate values.

3.1 Objective

- Maintain fluency and natural language quality.

- Preserve clinical or contextual meaning.

- Remove or transform PII to prevent re-identification.

3.2 Prompt Engineering Techniques

We tested four strategies:

- Few-Shot Prompts: Providing multiple examples of transformation.

- Contrastive Prompts: Presenting "correct vs wrong" pairs to discourage oversimplification.

- Role-Based Prompts: Assigning a role (e.g., "Healthcare Privacy Officer").

- Chain-of-Thought Reasoning: Instructing the model to reason step-by-step.

Result: Few-Shot and Contrastive prompts performed best, offering high anonymisation rates with low leakage.

3.3 Evaluation Metrics

- Anonymisation Rate: % of PII spans successfully transformed.

- Leakage Rate: % of PII remaining identifiable.

- BERTScore F1: Semantic similarity to original text (Utility).

- Redaction Frequency: Occurrences of masking instead of anonymising.

3.5 Automatic Prompt Engineering (APE)

To improve beyond manual prompts, we implemented an APE loop based on Zhou et al. (2022), adapted for the FHIR dataset.

Process:

- Initialisation: Generate candidate prompts.

- Evaluation: Test on FHIR training subset.

- Selection: Pick top 2 "parents".

- Variation: Create structured variants (evolutionary mutation).

- Iteration: Repeat until stable.

Performance of Best-Evolved Prompt:

- Anonymisation Rate: 0.9541

- Leakage Rate: 0.0459

- BERTScore F1: 0.8890

4. Segmentation for Long Documents

4.1 Motivation

On long medical documents (~500–1,000 words), LLMs tended to oversummarise and drop details. We introduced text segmentation to break documents into manageable units.

4.2 Segmentation Strategies

- Naive Paragraph Splitting: Simple split by paragraph.

- Probabilistic Text Segmentation: Using cosine similarity matrices and reverse sigmoid weights to find boundaries.

- Semantic Word Embeddings (Greedy & DP): Using transformer embeddings to find semantic shifts.

- Greedy: Iteratively divides based on immediate semantic shifts.

- Dynamic Programming (DP): Optimises boundary placement to minimise overall segmentation cost.

4.3 Evaluation (Pₖ Metric)

Lower Pₖ is better.

- Split by paragraph: 0.2353

- Probabilistic: 0.4118

- Semantic Embeddings (Greedy/DP): 0.1176

Greedy was chosen for production due to faster runtime with equivalent performance.

4.4 Impact on Anonymisation

- Without Segmentation: Anonymisation Rate: 0.798 | Leakage: 0.202 | PII Leaked: 24

- With Greedy Segmentation: Anonymisation Rate: 0.878 | Leakage: 0.122 | PII Leaked: 14

5. Applying Modern Research: Adversarial & Utility-Aware Anonymisation

5.1 Language Models Are Advanced Anonymizers

Adapted from Staab et al. (2024).

- Adversarial Agent: Tries to guess attributes from text.

- Anonymisation Agent: Rewrites text to reduce cues based on feedback.

Results:

- Adversarial Agent-informed anonymiser achieved Anonymisation Rate: 0.9125 and Leakage: 0.0875, with a slight dip in BERT F1 (0.9118).

5.2 & 5.3 RUPTA Adaptation

Adapted from Yang et al. (2025). We designed a simplified multi-agent workflow using LLaMA-8B:

- Text Segmentation: Greedy segmentation on long text.

- Anonymiser Agent: Anonymises segments using APE-optimised prompts.

- Privacy Evaluation: Uses BERT NER to detect "hard" PII and an LLM to find quasi-identifiers.

- Utility Evaluation: Uses Clinical Longformer-based BERT F1 to check for loss of clinical content.

- Optimiser Agent: Refines text to remove hard PII (P-Status = 0) and restore clinical utility.

5.5 Results (Overall Average)

| Phase | Anonymisation Rate | Leakage Rate | BERT Score F1 |

|---|---|---|---|

| First Anonymized Version | 86.65% | 13.35% | 0.9305 |

| Final Optimized Version | 96.71% | 3.29% | 0.9166 |

This confirms that multi-agent optimisation with segmentation and a BERT encoder detector substantially improves privacy metrics.

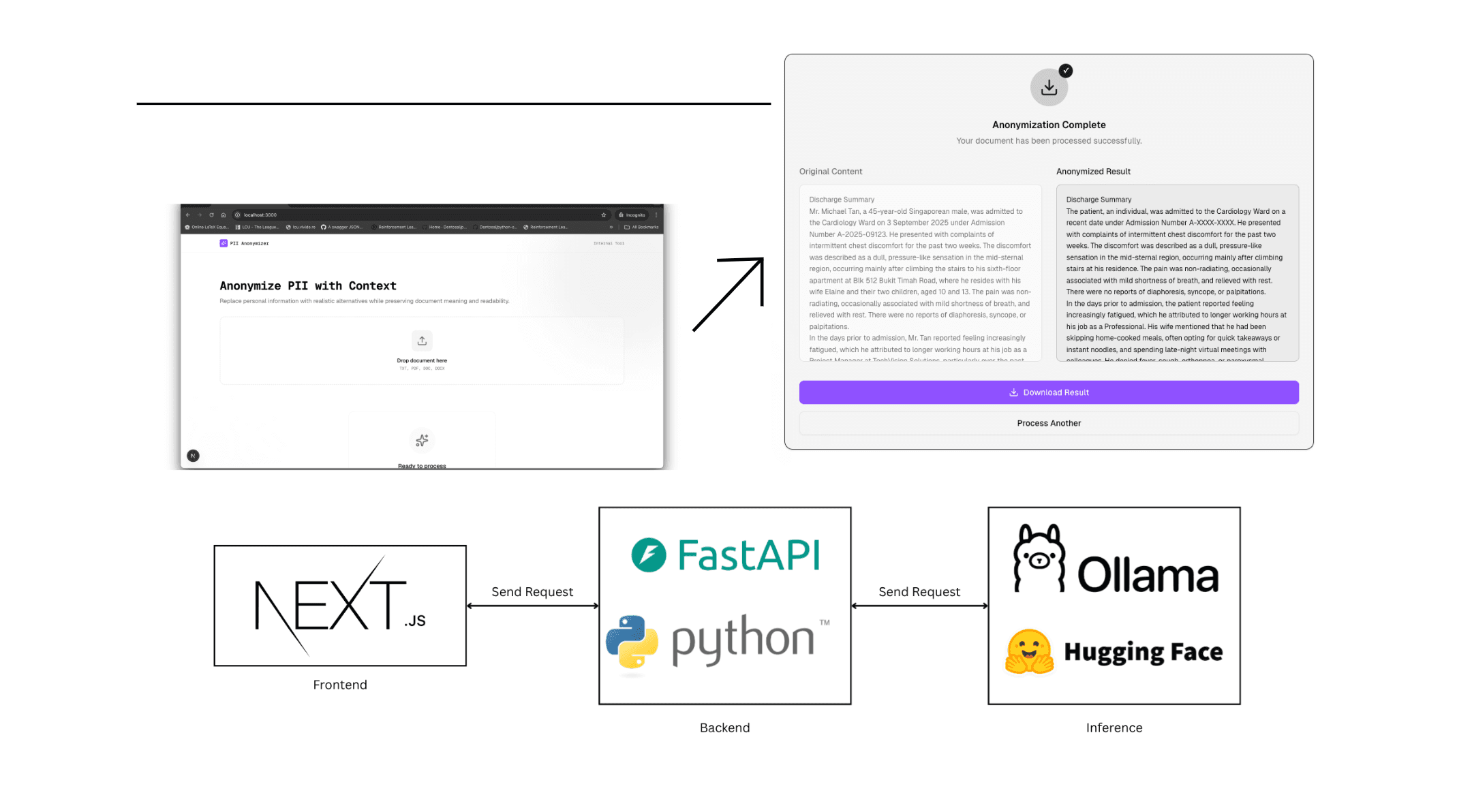

6. Integrated Pipeline and Implementation

6.1 Frontend

- Stack: Next.js 14, Tailwind CSS, Mammoth (DOCX to HTML), html-to-docx, Axios.

- Features: Full DOCX round-trip support (preserving formatting).

6.2 Backend

- Stack: Python, FastAPI, Uvicorn, LangChain, Ollama, Transformers, NLTK, Pandas.

- Role: Orchestrates segmentation, anonymisation, and evaluation.

6.3 Inference

- Local Inference: Uses Ollama to run LLaMA models locally on GPU/CPU.

- Benefits: No vendor lock-in, no per-token charges, data privacy (documents never leave the environment).

7. Future Work

- Direct Preference Optimisation (DPO): Train a compact model using preference pairs to execute trade-offs in one step.

- Knowledge Distillation: Distil capabilities from larger "teacher" models into smaller "student" models for lower latency.

- Differential Privacy: Integrate formal privacy guarantees.

8. Conclusion

This project demonstrates that domain-specific PII anonymisation can be significantly improved by combining NER-based detection, LLM-based generative anonymisation, intelligent text segmentation, and adversarial/utility-aware optimisation.

Core Insights:

- High-quality labels are essential for reliable PII detectors.

- Prompt-engineered LLMs need segmentation and evaluation mechanisms for long documents.

- Adversarial and RUPTA-inspired frameworks provide a systematic way to balance privacy and utility.

The final integrated pipeline represents a concrete step towards deployable, domain-specific anonymisation systems that respect modern privacy regulations while preserving analytical value.

References

- Alemi, A. A., & Ginsparg, P. (2015). Text segmentation based on semantic word embeddings. arXiv preprint arXiv:1503.05543.

- Beeferman, D., Berger, A., & Lafferty, J. (1999). Statistical models for text segmentation. Machine learning, 34, 177-210.

- Staab, R., Vero, M., Balunović, M., & Vechev, M. (2024). Large language models are advanced anonymizers. arXiv preprint arXiv:2402.13846.

- Yang, T., Zhu, X., & Gurevych, I. (2025). Robust utility-preserving text anonymization based on large language models. ACL 2025.

- Zhou, Y., et al. (2022). Large language models are human-level prompt engineers. ICLR 2023.